OK Computer

My first foray into AI research assistants

A quick housekeeping note before today’s post: I’m finding it hard to publish BookSmarts as regularly as I’d like, so I’ve added an alternative to paid subscriptions. If you are a free subscriber and want to show your appreciation for a post, you can now buy me a coffee. Or you can share it with a friend. Or simply keep reading. I’m just glad you’re here.

I have tried, probably harder than most journalists, to pretend that AI doesn’t exist. I haven’t had the bandwidth to truly grapple with what generative AI means for writers—and the fate of the human species—so I’ve basically been ignoring it. At this point, I’m probably the only writer I know who hasn’t experimented with ChatGPT.

But this week, I’m running the first installment of a two-part series on AI research assistants. A growing number of these tools are being marketed to researchers of all types, and I got curious about how they work.

Today, I’ll share what I learned about how these tools perform when you ask them a specific question. In a future post, I’ll look at their ability to summarize and interrogate a specific document, like an academic paper.

To be clear, I still have a lot of qualms about AI and its rapid integration into everything we do online. In just the last few weeks, there have been sobering stories about how AI summaries are throttling traffic to news sites and how the power demands of computing centers are driving a surge in greenhouse gas emissions. Cool, cool.

Even so, it seems better to know what this stuff is all about than to keep hiding under a rock.

This is, by necessity, a very superficial overview. I’ve only just started experimenting with these tools, and I’ve probably only scratched the surface of what they can do. However, my dabbling revealed some useful lessons about the potential benefits and pitfalls of AI research assistants. Off we go.

What are these tools?

There are probably others out there, but here, I’m focusing on Elicit, Perplexity, SciSpace, and Scite.

Most of them can do some version of the following:

Answer a general query

Expand on that answer with follow-up questions or additional analysis

Summarize a reference document (Scite does not offer this feature)

Save your work for later (if you create an account)

How much do they cost?

Elicit, Perplexity, and SciSpace offer free versions with some limitations, as well as paid subscriptions in the ballpark of $10/month.

Scite costs $20/month after a free trial, or $12/month with an annual subscription.

And do they work??

Ah, that’s the real question, isn’t it? For my (admittedly simplistic) experiment, I asked each research assistant the same question: “When did grass evolve?” I chose this question because, at this point in my book research, I know enough to fact-check the answer. I also chose it because scientists’ thinking on the matter has changed over time, and I wanted to see how well these tools would capture that shift.

So…drumroll…here’s how each assistant did:

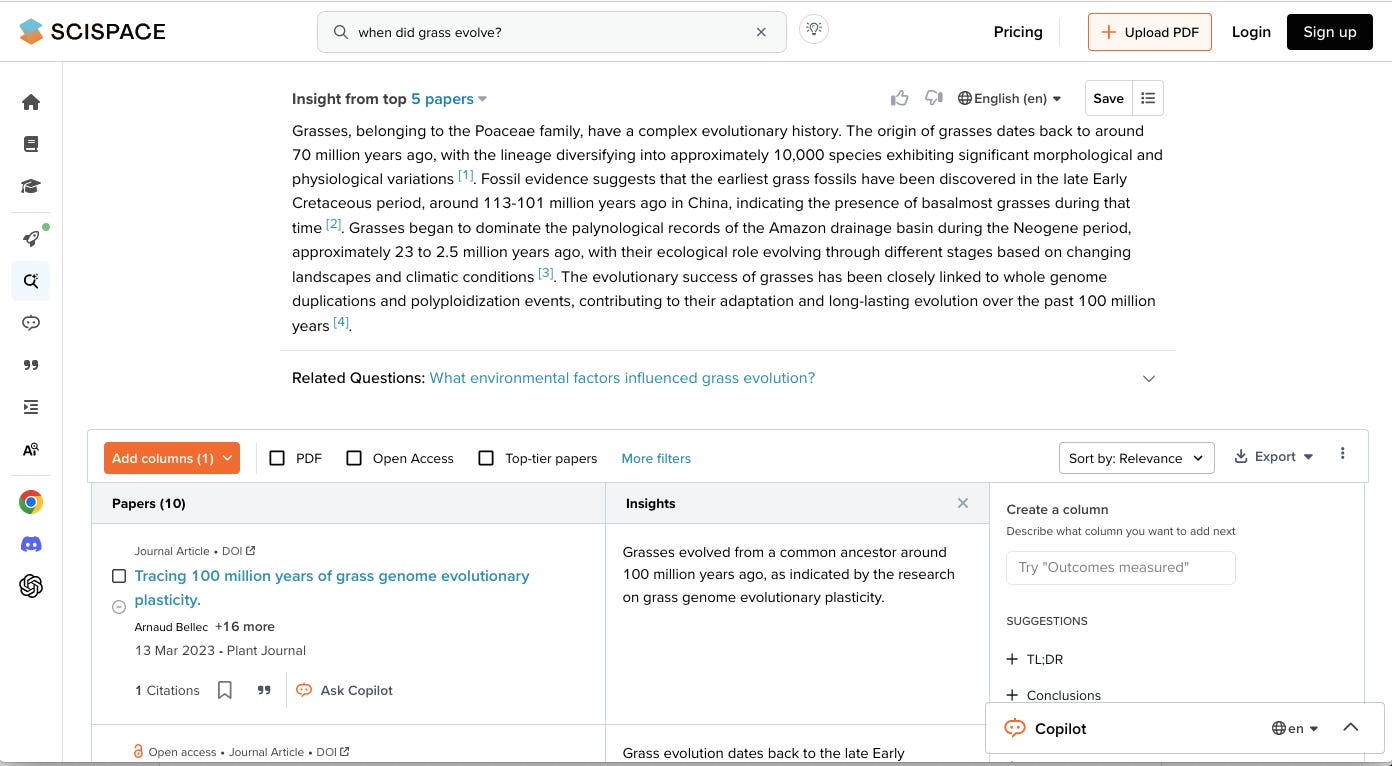

SciSpace, which claims to help users “discover and analyze research without limits,” gave the worst response. It provided an outdated answer (that grass first originated 70 million years ago), then followed it up with a correct but flatly contradictory fact (that the oldest grass fossils date back more than 100 million years). You don’t have to be a scientist to know that doesn’t make sense. This was followed by a random detail about when grass spread through the Amazon basin. My grade: D.

Elicit (tagline: “Analyze research papers at superhuman speed”) performed only a little better. Perhaps moving too fast for its own good, Elicit didn’t deign to answer my question at all. It noted only that grass evolution was “a complex and multi-stage process” (true, but not that helpful). Then it offered some additional details, including the true but self-evident fact that grasses are key to the establishment of grasslands… I’d give it a C.

Perplexity (an “AI search engine designed to revolutionize the way you discover information”) gave a broad but not incorrect answer that grass originated in the Cretaceous period. It then offered a simple and generally accurate summary of how grass became such a dominant component of Earth’s ecosystems before suggesting some reasonable follow-up questions. A solid B effort.

Scite (“Ask a question, get an answer backed by real research”) gave the most thorough answer, but still an imperfect one. It cited an outdated source that placed the origin of grass in the Upper Cretaceous, which is the wrong half of the period (“upper” means “late” in geology-speak). However, it then gave a pretty comprehensive and accurate overview of grass evolution. The language was a tad technical for lay readers, but that’s not necessarily a dig. More than the others, Scite markets itself to researchers in academia and industry, who are more likely to understand jargon. A B+ answer (for the right audience).

Are there other ways to use these tools?

I’m so glad you asked 😉 Another reasonable—and potentially better—use of AI research assistants is as a way to find primary sources.

Google Scholar is a great place to start. However, it can be hard to get it to cough up the answer to a specific question, especially if you don’t know what lingo to put in the search bar to call up the relevant studies. I’ve often found myself wishing that Google Scholar could just read my mind and tell me exactly what I need to know.

Fortunately, that’s exactly what AI research assistants are designed to do.

All of the tools I tested listed the references used to generate their answers and backed each individual statement with a citation, meaning they can also function as a way to conduct a literature search. And surprisingly, when graded this way, the ranking came out completely differently:

Elicit, which draws from Semantic Scholar’s library of 125 million academic articles, and SciSpace, which draws on more than 200 million studies, both referenced reputable papers (the ones I would probably point to if asked) as well as a few I had missed in my research. They also both gave helpful one- or two-sentence summaries of each reference in clear, accessible language.

Scite also pulls from a large corpus of technical papers. However, it did not provide plain-language summaries of the studies. It just highlighted the relevant quote from the original text, which was often full of jargon and devoid of context.

Finally, I was alarmed to see that Perplexity, which did reasonably well in answering my question, cited no peer-reviewed studies at all, but rather, an assortment of websites including Reddit. With some digging, these sites could lead to reliable primary sources, but on their own, most would probably not pass fact-checking muster for a piece of nonfiction. (To be fair, Perplexity bills itself as an all-purpose search engine, not just one for sifting through academic papers.)

Enjoying this post? BookSmarts is free, but you can buy me a coffee or upgrade to paid if you want to support the newsletter.

TL;DR

Since I started working on this post, I’ve continued playing around with these tools, especially Perplexity, because it does not limit free users. And I have found that it’s a real mixed bag.

It seems to do well with very specific questions (“What grass grows in the Sahara?”), but it has also spit out answers that are flat-out wrong and would get you into real trouble if you took them at face value. For instance, Perplexity told me that a particular species of grass held the record for the highest-elevation vascular plant ever recorded. The problem was that the source it cited did not say anything of the sort, and the grass in question turned out to be a sedge…

Basically, my takeaway is that these tools are worth exploring, both as a way to find research materials and as a way to answer simple questions that would be laborious to nail down through traditional means. However, I wouldn’t use any information they provide without triple-checking it first.

As always, I’d love to hear your thoughts!